It's not who has the best algorithm that wins; it's who has the most data. (Andrew Ng)

Image classification is the task of assigning an input image one label from a fixed set of categories. This is one of the core problems in Computer Vision that, despite its simplicity, has a large variety of practical applications.

In this blog I will demonstrate how deep learning can be applied even if we don't have enough data. I have created my own custom car vs bus classifier with 100 images of each category. The training set has 70 images of each category, while the validation set makes up the remaining 30.

Challenges

- Viewpoint variation. A single instance of an object can be oriented in many ways with respect to the camera.

- Scale variation. Visual classes often exhibit variation in their size (size in the real world, not just in terms of their extent in the image).

- Deformation. Many objects of interest are not rigid bodies and can be deformed in extreme ways.

- Occlusion. The objects of interest can be occluded. Sometimes only a small portion of an object (as little as a few pixels) could be visible.

- Illumination conditions. The effects of illumination are drastic on the pixel level.

Cat vs dog image classification

Applications

1. Stock Photography and Video Websites. It's fueling billions of searches daily on stock websites. It provides the tools to make visual content discoverable by users via search.

2. Visual Search for Improved Product Discoverability. Visual search allows users to search for similar images or products using a reference image they took with their camera or downloaded from the internet.

3. Security Industry. This emerging technology is playing a vital role in the security industry. Many security devices have been developed, including drones, security cameras, facial recognition biometric devices, etc.

4. Healthcare Industry. Microsurgical procedures in the healthcare industry powered by robots use computer vision and image recognition techniques.

5. Automobile Industry. It can be used to reduce the rate of road accidents, help enforce traffic rules and regulations, etc.

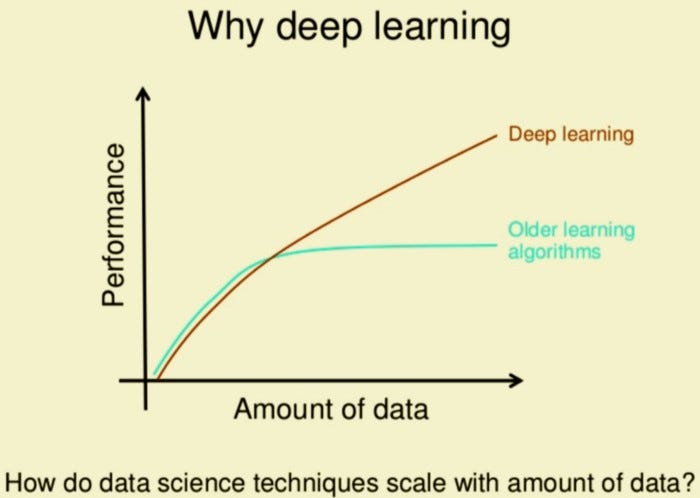

Model performance as a function of the amount of data

Environment and tools

- matplotlib

- keras

Data

This is a binary classification problem. I downloaded 200 images, of which 100 are bus images and the rest are car images. For downloading the data, I have used this. I have split the data as shown:

    dataset
        train
            car
                car1.jpg
                car2.jpg
                ...
            bus
                bus1.jpg
                bus2.jpg
                ...
        validation
            car
                car1.jpg
                car2.jpg
                ...
            bus
                bus1.jpg
                bus2.jpg
                ...
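A split like the one above can be produced with a few lines of standard-library Python. This is only a sketch under assumptions: the function name `split_dataset`, the flat `raw/<class>/` source layout, and the fixed seed are all hypothetical and not taken from the original post.

```python
import random
import shutil
from pathlib import Path


def split_dataset(source_dir, dest_dir, train_fraction=0.7, seed=0):
    """Copy source_dir/<class>/* into dest_dir/{train,validation}/<class>/.

    Returns a dict mapping class name -> (train count, validation count).
    """
    source_dir, dest_dir = Path(source_dir), Path(dest_dir)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    counts = {}
    for class_dir in sorted(p for p in source_dir.iterdir() if p.is_dir()):
        images = sorted(p for p in class_dir.iterdir() if p.is_file())
        rng.shuffle(images)
        n_train = round(len(images) * train_fraction)
        splits = {"train": images[:n_train], "validation": images[n_train:]}
        for split_name, files in splits.items():
            target = dest_dir / split_name / class_dir.name
            target.mkdir(parents=True, exist_ok=True)
            for image in files:
                shutil.copy2(image, target / image.name)
        counts[class_dir.name] = (n_train, len(images) - n_train)
    return counts
```

Calling `split_dataset("raw", "dataset")` on a folder holding `raw/car` and `raw/bus` with 100 images each would place 70 images per class under `dataset/train` and 30 under `dataset/validation`.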

Image Classification

The complete paradigm nomenclature pipeline can exist formalized as follows:

- Our input is a training dataset that consists of N images, each labeled with one of 2 different classes.

- Then, we use this training set to train a classifier to learn what every one of the classes looks like.

- In the end, we evaluate the quality of the classifier by asking it to predict labels for a new set of images that it has never seen before. We will then compare the true labels of these images to the ones predicted by the classifier.
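The last step, comparing true labels with predicted ones, comes down to counting matches. The sketch below is a minimal illustration; the helper name `accuracy` and the four example labels are made up for the demonstration.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true labels."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    if not true_labels:
        raise ValueError("need at least one label")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Illustrative labels only: the third image is misclassified.
true_labels = ["car", "bus", "bus", "car"]
predicted_labels = ["car", "bus", "car", "car"]
print(accuracy(true_labels, predicted_labels))  # 3 of 4 correct -> 0.75
```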

Let's get started with the code.

I started by loading Keras and the layers required for building the model.

The next step was to build the model. This can be described in the following 3 steps.

- I used two convolutional blocks, each composed of a convolutional layer and a max-pooling layer. I used relu as the activation function for the convolutional layers.

- On top of that I used a flatten layer, followed by two fully connected layers with relu and sigmoid as activations respectively.

- I used Adam as the optimizer and cross-entropy as the loss.
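The three steps above could look roughly like this in Keras. The post does not give the exact hyperparameters, so the 150x150 RGB input size, the 32 and 64 filters, the 64-unit dense layer, and the choice of binary cross-entropy (the usual pairing with a single sigmoid output) are all assumptions of this sketch, as is the function name `build_model`.

```python
from tensorflow import keras
from tensorflow.keras import layers


def build_model(input_shape=(150, 150, 3)):
    """Two conv/max-pool blocks, then flatten and two dense layers.

    All sizes here are assumed values, not taken from the original post.
    """
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        # Convolutional block 1
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Convolutional block 2
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        # Classifier head: relu hidden layer, sigmoid output for 2 classes
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(1, activation="sigmoid"),
    ])
    model.compile(
        optimizer="adam",
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_model().summary()` prints the layer stack and parameter counts, which is a quick way to check that the architecture matches the description.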

Data Augmentation

The practice of data augmentation is an effective way to increase the size of the training set. Augmenting the training examples allows the network to "see" more diversified, but still representative, data points during training.

I defined a set of augmentations for the training set: rotation, shift, shear, flip, and zoom.

Whenever the dataset size is small, data augmentation should be used to create additional training data.

I also created a data generator to get our data from our folders into Keras in an automated way. Keras provides convenient Python generator functions for this purpose.
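One way to express these augmentations and generators is with `ImageDataGenerator` and `flow_from_directory`. The post does not confirm that these are the exact calls it used, and every numeric range below, along with the `dataset/` path, the 150x150 target size, and the helper name `make_generators`, is an assumed value. Note that recent TensorFlow releases mark `ImageDataGenerator` as deprecated in favor of preprocessing layers, so treat this as a sketch of the classic approach.

```python
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Augment only the training images; all ranges are assumed values.
train_datagen = ImageDataGenerator(
    rescale=1.0 / 255,        # scale pixel values to [0, 1]
    rotation_range=40,        # random rotation, in degrees
    width_shift_range=0.2,    # horizontal shift, fraction of width
    height_shift_range=0.2,   # vertical shift, fraction of height
    shear_range=0.2,          # shear intensity
    zoom_range=0.2,           # random zoom in or out
    horizontal_flip=True,     # random left-right flip
)

# Validation images are only rescaled, never augmented.
val_datagen = ImageDataGenerator(rescale=1.0 / 255)


def make_generators(data_dir="dataset", image_size=(150, 150), batch_size=32):
    """Build generators that read data_dir/train and data_dir/validation."""
    train_gen = train_datagen.flow_from_directory(
        f"{data_dir}/train",
        target_size=image_size,
        batch_size=batch_size,
        class_mode="binary",  # two classes -> labels 0 and 1
    )
    val_gen = val_datagen.flow_from_directory(
        f"{data_dir}/validation",
        target_size=image_size,
        batch_size=batch_size,
        class_mode="binary",
        shuffle=False,  # keep a stable order for evaluation
    )
    return train_gen, val_gen
```

Leaving the validation generator free of augmentation keeps the validation accuracy a measure of performance on unmodified images.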

Next I trained the model for 50 epochs with a batch size of 32.
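To get a feel for what these numbers mean, here is the arithmetic for the number of weight updates. It assumes the 70/30 split applies per class, giving 140 training and 60 validation images; the helper name `steps_per_epoch` is just illustrative.

```python
import math


def steps_per_epoch(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)


batch_size = 32
epochs = 50
train_images = 2 * 70  # assumption: 70 training images per class
val_images = 2 * 30    # assumption: 30 validation images per class

train_steps = steps_per_epoch(train_images, batch_size)
val_steps = steps_per_epoch(val_images, batch_size)

print(train_steps)           # 5 batches per epoch (the last one holds 12 images)
print(val_steps)             # 2 validation batches
print(train_steps * epochs)  # 250 weight updates over the whole run
```

With so few updates in total, each epoch is cheap, which is part of why training on a dataset this small is fast.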

Batch size is one of the most important hyperparameters to tune in deep learning. I prefer to use a larger batch size to train my models, as it allows computational speedups from the parallelism of GPUs. However, it is well known that too large a batch size will lead to poor generalization.

At one extreme, using a batch equal to the entire dataset computes the exact gradient, which for a convex objective guarantees convergence to the global optimum. However, this comes at the price of slower convergence to that optimum. On the other hand, smaller batch sizes have been shown to converge faster to good results. This is intuitively explained by the fact that smaller batch sizes allow the model to start learning before having to see all the data. The downside of using a smaller batch size is that the model is not guaranteed to converge to the global optimum.

Therefore it is often advised to start at a small batch size, reaping the benefits of faster training dynamics, and steadily grow the batch size through training.
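The idea of growing the batch size during training can be written as a simple schedule. This is a hypothetical illustration, not something the post implements: the function name and all four parameters (starting size 8, doubling every 10 epochs, cap of 64) are assumed values chosen for the example.

```python
def batch_size_schedule(epoch, initial=8, growth=2, every=10, maximum=64):
    """Batch size for a 0-based epoch: multiply by `growth` every `every` epochs, capped at `maximum`."""
    return min(maximum, initial * growth ** (epoch // every))


for epoch in (0, 10, 20, 30, 40):
    print(epoch, batch_size_schedule(epoch))
# 0 8
# 10 16
# 20 32
# 30 64
# 40 64
```

In practice such a schedule would be applied by training for a few epochs at each batch size in turn, since the batch size is fixed for a single training call.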

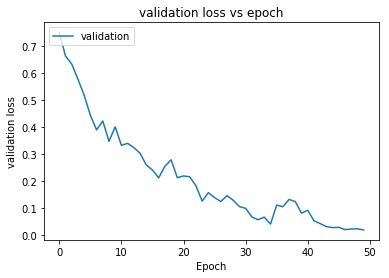

Let's visualize the loss and accuracy plots.
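A plotting helper along these lines would produce such curves with matplotlib. It assumes a dictionary shaped like the `history` attribute of the object returned by Keras training, with the keys `loss`, `val_loss`, `accuracy`, and `val_accuracy`; older Keras versions name the accuracy keys differently, so adjust as needed. The function name `plot_history` is hypothetical.

```python
import matplotlib.pyplot as plt


def plot_history(history):
    """Plot loss and accuracy per epoch from a Keras-style history dict."""
    epochs = range(1, len(history["loss"]) + 1)
    fig, (ax_loss, ax_acc) = plt.subplots(1, 2, figsize=(10, 4))

    # Left panel: training vs validation loss
    ax_loss.plot(epochs, history["loss"], label="training")
    ax_loss.plot(epochs, history["val_loss"], label="validation")
    ax_loss.set_xlabel("epoch")
    ax_loss.set_ylabel("loss")
    ax_loss.legend()

    # Right panel: training vs validation accuracy
    ax_acc.plot(epochs, history["accuracy"], label="training")
    ax_acc.plot(epochs, history["val_accuracy"], label="validation")
    ax_acc.set_xlabel("epoch")
    ax_acc.set_ylabel("accuracy")
    ax_acc.legend()

    fig.tight_layout()
    return fig
```

The returned figure can be displayed with `plt.show()` or written to disk with `fig.savefig("curves.png")`.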

validation loss vs epoch

The model is able to reach 100% validation accuracy in fifty epochs.

Conclusions

Thus deep learning is indeed possible with less data. With just 100 images of each category, the model is able to achieve 100% validation accuracy in 50 epochs. This model can be extended to other binary and multi-class image classification problems. One could argue that this was fairly easy, as a car and a bus look quite different even to the naked eye. Can we extend this and make a benign/malignant cancer classifier? Sure we can, but the key is using data augmentation whenever the dataset is small. Another approach is transfer learning using pre-trained weights.

References/Further Readings

Transfer Learning for Image Classification in Keras

One stop guide to Transfer Learning

Transfer Learning vs Training from Scratch in Keras

Whether to transfer learn or not?

Don't Decay the Learning Charge per unit, Increase the Batch Size


NanoNets: How to use Deep Learning when you have Limited Data


Contacts

If you want to keep updated with my latest articles and projects, follow me on Medium. These are some of my contact details:

- Personal Website

- Medium Profile

- GitHub

- Kaggle

Happy reading, happy learning and happy coding!

Bio: Abhinav Sagar is a senior year undergrad at VIT Vellore. He is interested in data science, machine learning and their applications to real-world problems.

Original. Reposted with permission.

